Connect your LLM account

Florentine.ai uses a bring-your-own-key model: you supply your own LLM API key (OpenAI, Deepseek, Google or Anthropic), either by saving it in your dashboard or by passing it in your API requests.

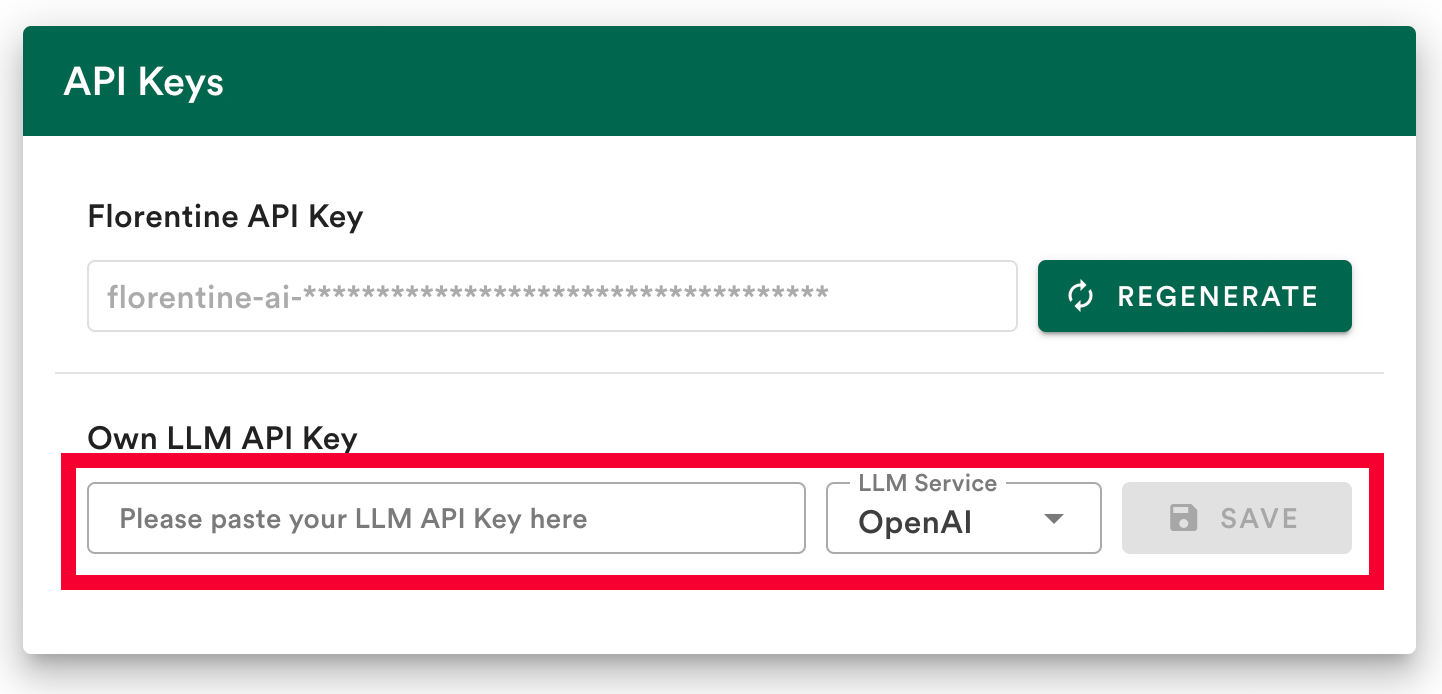

Option 1: Save your LLM key in the dashboard

The easiest way to connect to your LLM provider is to save your LLM API key in your Florentine.ai dashboard.

- Add your API key.
- Select your LLM provider (OpenAI, Deepseek, Google or Anthropic).
- Click Save.

Option 2: Provide your LLM key in API requests

If you prefer not to store the key in your Florentine.ai account or want to use multiple LLM keys, you can pass the key in your API request.

| Parameter | Description | Allowed Values |
|---|---|---|
| `llmService` | Specifies the LLM provider to use. | `"openai"`, `"deepseek"`, `"google"`, `"anthropic"` |
| `llmKey` | Your API key for the selected LLM service. | A valid API key string |
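The allowed values in the table can be checked on the client before a request is sent. The following is a minimal TypeScript sketch under stated assumptions: `LLM_SERVICES`, `isLlmService` and `parseLlmConfig` are hypothetical names that are not part of the Florentine.ai client, and the lowercase normalization is a client-side convenience, not documented API behavior.

```typescript
// Allowed provider identifiers, copied from the parameter table.
const LLM_SERVICES = ["openai", "deepseek", "google", "anthropic"] as const;
type LlmService = (typeof LLM_SERVICES)[number];

// Type guard: narrows an arbitrary string to one of the allowed values.
function isLlmService(value: string): value is LlmService {
  return (LLM_SERVICES as readonly string[]).indexOf(value) !== -1;
}

// Hypothetical helper: validates the two parameters before they are sent.
// Assumption: trimming and lowercasing the service name is acceptable here.
function parseLlmConfig(
  service: string,
  key: string
): { llmService: LlmService; llmKey: string } {
  const normalized = service.trim().toLowerCase();
  if (!isLlmService(normalized)) {
    throw new Error(
      `Unsupported llmService "${service}"; expected one of: ${LLM_SERVICES.join(", ")}`
    );
  }
  if (key.trim() === "") {
    throw new Error("llmKey must be a non-empty string");
  }
  return { llmService: normalized, llmKey: key };
}
```

Failing early like this surfaces a typo in the provider name locally instead of in an API error response.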

Set `llmService` and `llmKey` in your JSON request body. With the Node.js client, pass these values in the constructor options object:

```ts
import { Florentine } from "@florentine-ai/api";

const FlorentineAI = new Florentine({
  florentineToken: "<FLORENTINE_API_KEY>",
  llmService: "<YOUR_LLM_SERVICE>", // one of: "openai", "deepseek", "google", "anthropic"
  llmKey: "<YOUR_LLM_API_KEY>"
});

const res = await FlorentineAI.ask({ question: "<YOUR_QUESTION>" });
```

```bash
# llmService must be one of: "openai", "deepseek", "google", "anthropic"
curl https://nltm.florentine.ai/ask \
  -H "content-type: application/json" \
  -H "florentine-token: <FLORENTINE_API_KEY>" \
  -d '{
    "llmService": "<YOUR_LLM_SERVICE>",
    "llmKey": "<YOUR_LLM_API_KEY>",
    "question": "<YOUR_QUESTION>"
  }'
```

Please note

If you provide an `llmKey` in the request, it overrides any key stored in your account.
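The override rule can be illustrated with a request builder that sends `llmService` and `llmKey` only when a per-request key is wanted. This is a sketch, not the official client: `buildAskRequest` and `LlmOverride` are hypothetical names, the URL, header names and body fields are taken from the curl example, and the `POST` method and the fallback to the stored key when both fields are omitted are assumptions inferred from this page.

```typescript
// Hypothetical shape for a per-request provider override.
type LlmOverride = { llmService: string; llmKey: string };

type AskRequest = {
  url: string;
  init: { method: string; headers: Record<string, string>; body: string };
};

// Hypothetical helper: builds the arguments for fetch(url, init).
// Without an override, the body carries only the question, so the key
// saved in the dashboard would be used (assumption, see lead-in).
function buildAskRequest(
  florentineToken: string,
  question: string,
  override?: LlmOverride
): AskRequest {
  const body: Record<string, string> = { question };
  if (override) {
    body.llmService = override.llmService;
    body.llmKey = override.llmKey; // takes precedence over the stored key
  }
  return {
    url: "https://nltm.florentine.ai/ask",
    init: {
      method: "POST", // assumption: implied by curl's -d flag
      headers: {
        "content-type": "application/json",
        "florentine-token": florentineToken,
      },
      body: JSON.stringify(body),
    },
  };
}

// Uses the key stored in the account.
const withStoredKey = buildAskRequest("<FLORENTINE_API_KEY>", "<YOUR_QUESTION>");

// Overrides the stored key for this request only.
const withOverride = buildAskRequest("<FLORENTINE_API_KEY>", "<YOUR_QUESTION>", {
  llmService: "anthropic",
  llmKey: "<YOUR_LLM_API_KEY>",
});
```

Either result can be passed to `fetch(request.url, request.init)`. Keeping the override optional makes it straightforward to switch providers per request while leaving a default key in the dashboard.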